Yihao Feng @yihaocs

research scientist at AIML @Apple; Ex AI Researcher @SFResearch; Ph.D. alumnus, UT Austin @UTCompSci. Reinforcement learning, diffusion models and LLMs. Palo Alto, CA · Joined September 2013

Tweets 217 · Followers 103 · Following 478 · Likes 3K

Great work showing prompt synthesis as a new scaling axis for reasoning. Good training data is scarce. This work showcases a framework that might make it possible to construct high-quality training problems for reasoning-focused LLMs. Technical details below:

Language Models that Think, Chat Better "This paper shows that the RLVR paradigm is effective beyond verifiable domains, and introduces RL with Model-rewarded Thinking (RLMT) for general-purpose chat capabilities." "RLMT consistently outperforms standard RLHF pipelines. This…

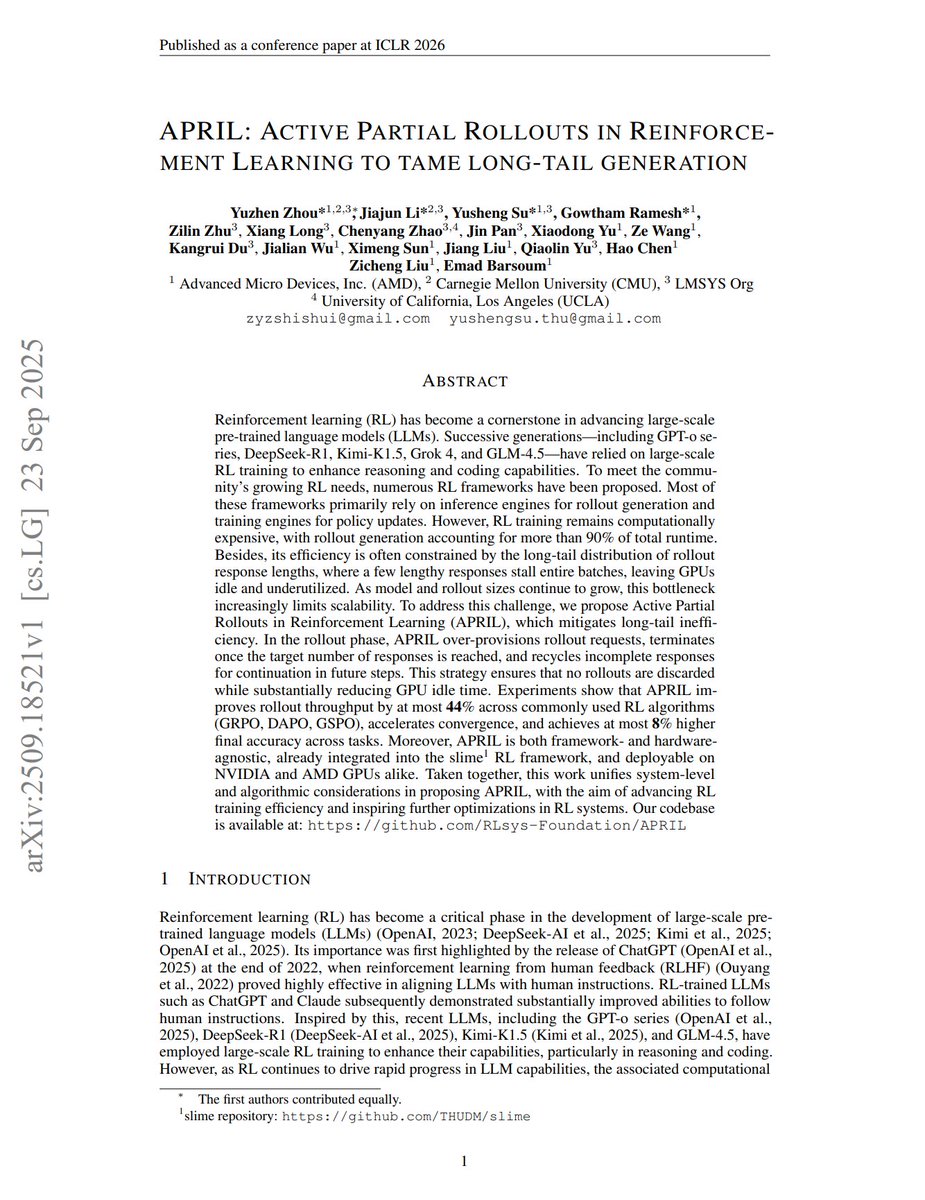

APRIL: Active Partial Rollouts in Reinforcement Learning to tame long-tail generation "we propose Active Partial Rollouts in Reinforcement Learning (APRIL), which mitigates long-tail inefficiency." "Experiments show that APRIL improves rollout throughput by at most 44% across…

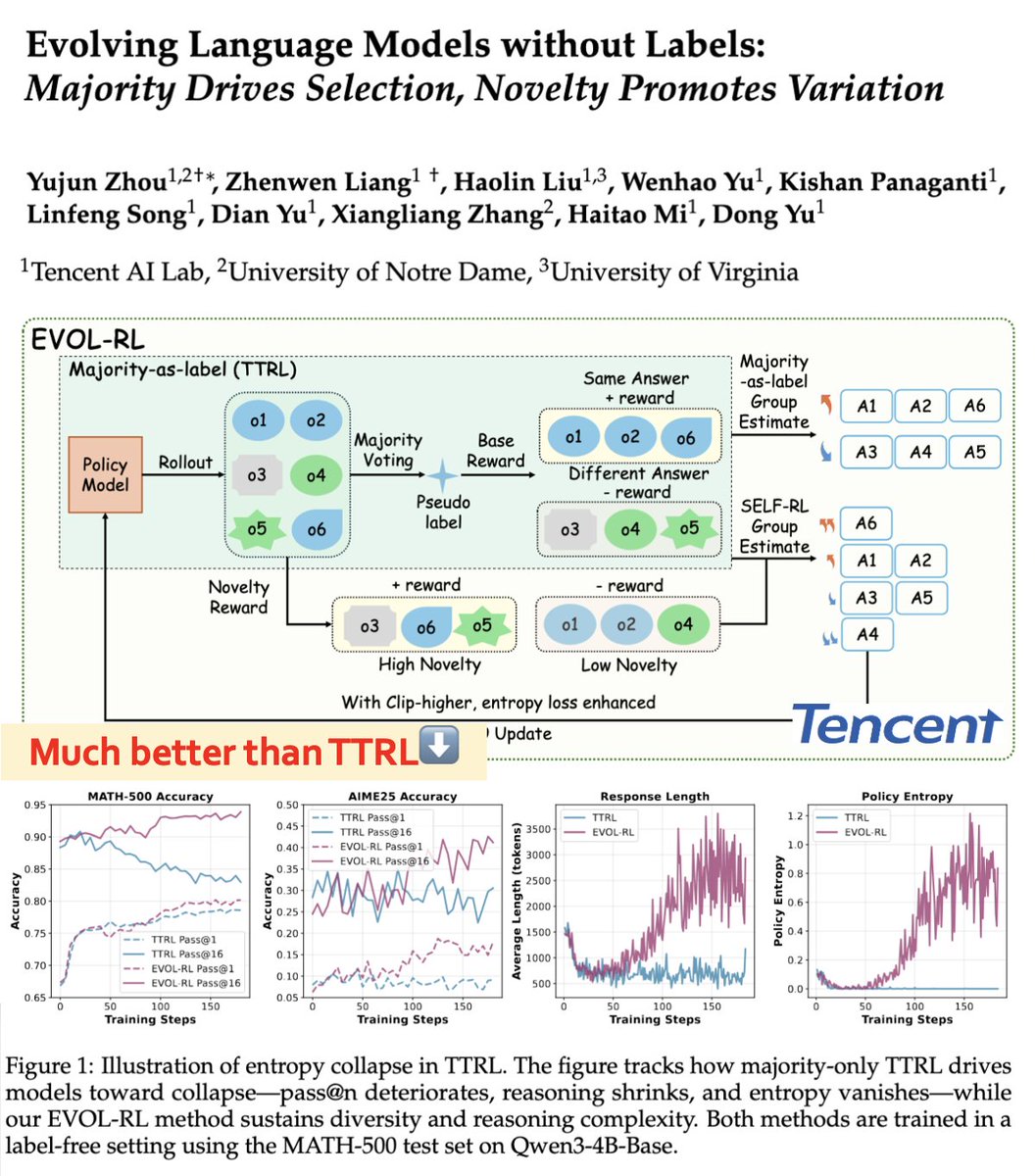

Also strongly recommend this paper on diversity reward in RL! The insights line up closely -- well worth reading together. https:// arxiv.org/abs/2509.15194 (Tencent) https:// arxiv.org/abs/2509.02534 (Meta) Not sure which diversity reward wins out 😀 (embedding vs…

RL often causes 𝐞𝐧𝐭𝐫𝐨𝐩𝐲 𝐜𝐨𝐥𝐥𝐚𝐩𝐬𝐞: generations become shorter, less diverse, and brittle. A simple fix is a 𝐝𝐢𝐯𝐞𝐫𝐬𝐢𝐭𝐲 reward to boost exploration. I use it in many of my projects, and it is surprisingly effective! Details in our NEW paper: arxiv.org/abs/2509.15194
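A minimal sketch of what a group-level diversity reward can look like, assuming several sampled responses per prompt and a lexical notion of similarity (word-bigram Jaccard overlap). This is an illustration, not the linked paper's method: its actual diversity measure may differ (it could be embedding-based, for example), and the helper names and the weight `lam` are hypothetical.

```python
def ngrams(text: str, n: int = 2) -> set[tuple[str, ...]]:
    """Set of word n-grams in a response (whitespace tokenization is an assumption)."""
    tokens = text.split()
    return {tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)}


def jaccard(a: set, b: set) -> float:
    """Jaccard similarity of two n-gram sets; two empty sets count as identical."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def diversity_bonus(responses: list[str], n: int = 2) -> list[float]:
    """Per response: 1 minus its mean n-gram similarity to the other responses in the group."""
    grams = [ngrams(r, n) for r in responses]
    bonuses = []
    for i, g in enumerate(grams):
        sims = [jaccard(g, h) for j, h in enumerate(grams) if j != i]
        bonuses.append(1.0 - sum(sims) / len(sims) if sims else 0.0)
    return bonuses


def shaped_rewards(responses: list[str], task_rewards: list[float],
                   lam: float = 0.1, n: int = 2) -> list[float]:
    """Task reward plus a lam-weighted diversity bonus (lam is a hypothetical knob)."""
    bonus = diversity_bonus(responses, n)
    return [r + lam * b for r, b in zip(task_rewards, bonus)]


group = ["the answer is 4", "the answer is 4", "two plus two equals four"]
print(diversity_bonus(group))            # [0.5, 0.5, 1.0]
print(shaped_rewards(group, [1.0] * 3))  # the distinct response earns the largest reward
```

Two identical correct answers split the bonus, while the differently phrased one earns the full bonus, which is the exploration pressure the tweet describes.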

What strikes me in this work is that as long as the data recipe is right, everything just works with RL and generalizes super well, even at the 1.7B scale. Even though people said it's hard to improve RL'ed Qwen models, we just did it! Thanks @_akhaliq for featuring my work for the third time…

Very cool thread about whether LLMs can do multi-hop reasoning without CoT. If you're curious, read the full thread; it's well written and answers the question clearly.

The puzzle:
* Synthetic + real fact: ✓ works
* Synthetic + synthetic: ✗ fails
* Synthetic facts in the same training document or in-context: ✓ works

High quality math is the secret sauce for reasoning models. The best math data is in old papers. But OCRing that math is full of insane edge cases. Let's talk about how to solve this, and how you can get better math data than many frontier labs 🧵

🚨 We’ve just published a recipe to train a frontier-level deep research agent using RL. With just 30 hours on an H200, any developer can now beat Sonnet-4 on DeepResearch Bench using open-source tools. (Thread 🧵)

New in-depth blog post - "Inside vLLM: Anatomy of a High-Throughput LLM Inference System". Probably the most in depth explanation of how LLM inference engines and vLLM in particular work! Took me a while to get this level of understanding of the codebase and then to write up…

Microsoft presents rStar2-Agent Agentic Reasoning Technical Report rStar2-Agent boosts a pre-trained 14B model to state of the art in only 510 RL steps within one week, achieving average pass@1 scores of 80.6% on AIME24 and 69.8% on AIME25, surpassing DeepSeek-R1 (671B) with…

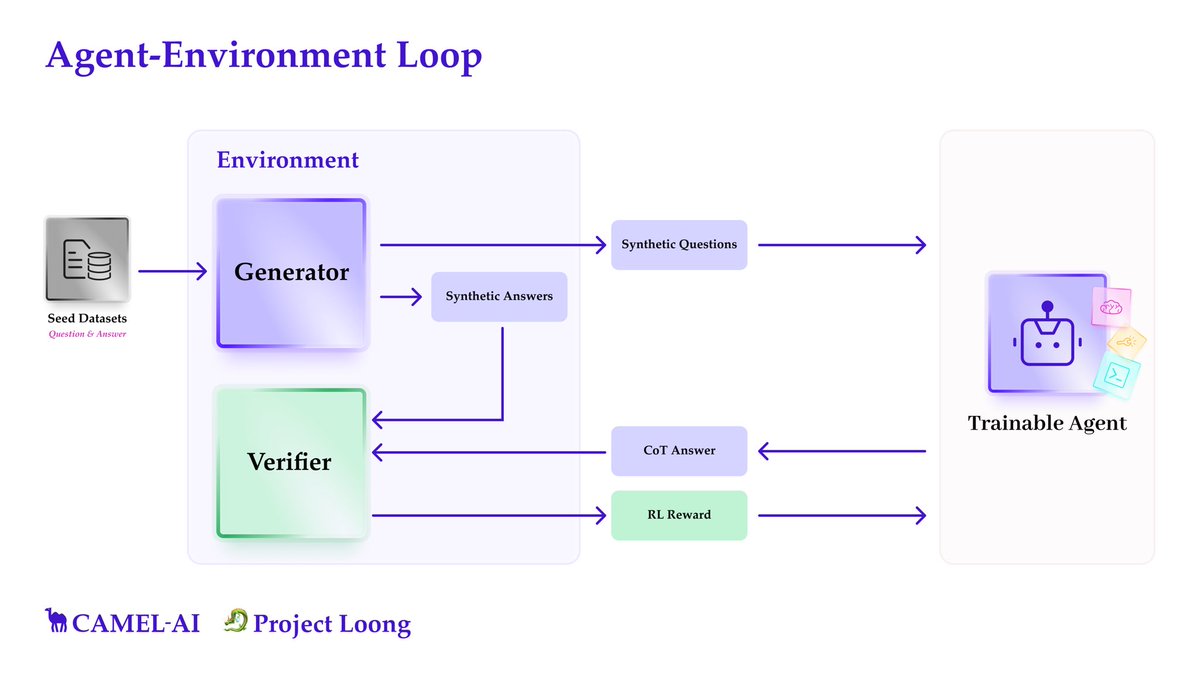

Sir, we built this: an RL environment for learning reasoning at scale. GitHub: github.com/camel-ai/loong HF dataset: huggingface.co/datasets/camel… We extracted seed datasets from sources like textbooks and code libraries such as sympy, networkX, Gurobi (math programming lib), rdkit…

Depth-Breadth Synergy in RLVR: Unlocking LLM Reasoning Gains with Adaptive Exploration "We dissect the popular GRPO algorithm and reveal a systematic bias: the cumulative-advantage disproportionately weights samples with medium accuracy, while down-weighting the low-accuracy…
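One way to see this bias, under assumptions the truncated quote doesn't state: binary 0/1 rewards, GRPO's group normalization using the population standard deviation, and "cumulative advantage" read as the summed absolute advantage over a group of size G. With k correct samples and accuracy p = k/G, each correct sample gets advantage sqrt((1-p)/p) and each incorrect one gets -sqrt(p/(1-p)), so the total is k·sqrt((1-p)/p) + (G-k)·sqrt(p/(1-p)) = 2G·sqrt(p(1-p)). That peaks at p = 0.5 and shrinks toward both the low- and high-accuracy ends. The sketch checks this numerically; it is an illustration, not the paper's own analysis.

```python
import math


def grpo_advantages(rewards: list[float], eps: float = 1e-8) -> list[float]:
    """Group-normalized advantages: (r - group mean) / (population std + eps)."""
    g = len(rewards)
    mean = sum(rewards) / g
    std = math.sqrt(sum((r - mean) ** 2 for r in rewards) / g)
    return [(r - mean) / (std + eps) for r in rewards]


def cumulative_abs_advantage(num_correct: int, group_size: int) -> float:
    """Summed |advantage| for a group with binary rewards and num_correct successes."""
    rewards = [1.0] * num_correct + [0.0] * (group_size - num_correct)
    return sum(abs(a) for a in grpo_advantages(rewards))


G = 8
for k in range(G + 1):
    p = k / G
    closed_form = 2 * G * math.sqrt(p * (1 - p))
    print(f"acc={p:.3f}  sum|A|={cumulative_abs_advantage(k, G):.3f}  "
          f"2G*sqrt(p(1-p))={closed_form:.3f}")
```

The printed table should show the numeric sum matching the closed form, with a prompt solved 4/8 times contributing more total advantage than one solved 1/8 times.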

We were able to reproduce the strong findings of the HRM paper on ARC-AGI-1. Further, we ran a series of ablation experiments to get to the bottom of what's behind it. Key findings: 1. The HRM model architecture itself (the centerpiece of the paper) is not an important factor.…

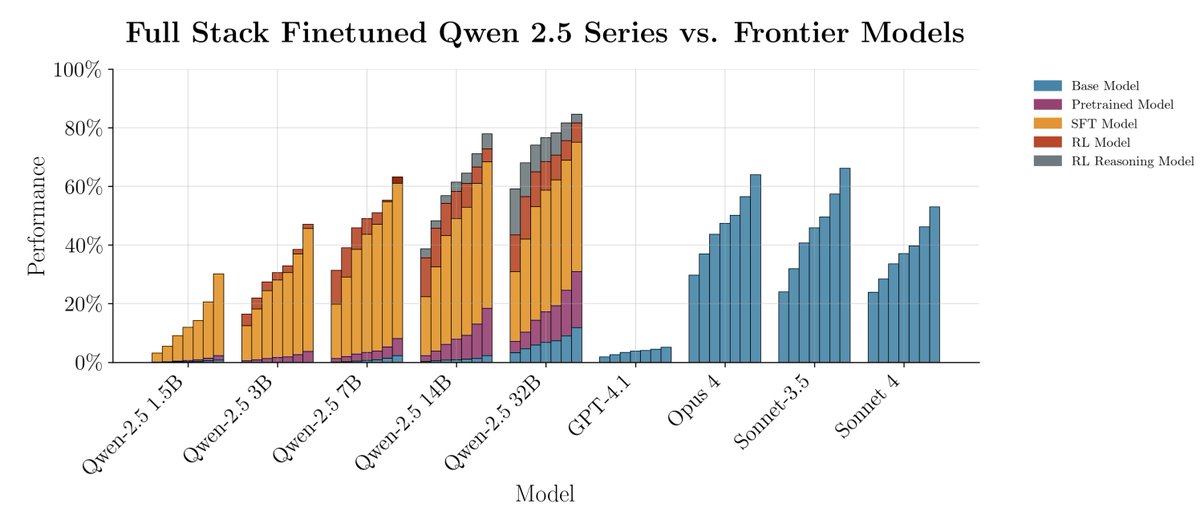

super cool to see this come together, incredible work spearheaded by @brendanh0gan. all in all, an incredibly detailed recipe for what it takes to craft a specialist model for OOD tasks where frontier models really struggle. paper/weights/data/code in brendan's thread :)

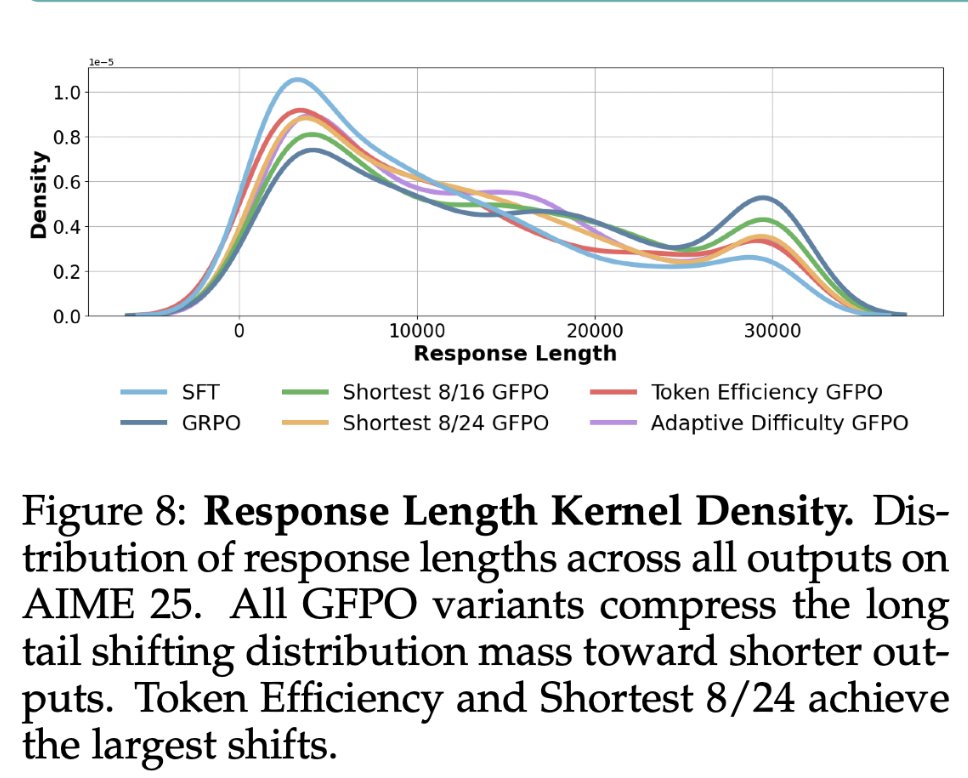

A neat observation: rejection sampling during GRPO lets you factor properties directly into your reward, moving from optimizing max_model E[Reward(response)] to max_model E[Reward(response) · Property(response)]. In our case it's "small length", but…
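A minimal sketch of this observation, assuming a binary property (here a hypothetical whitespace-token length budget, `max_tokens`) and assuming rejected samples stay in the GRPO group with zero reward. The tweet is truncated and doesn't say how rejected samples are actually handled. Zeroing the reward of samples that fail the property makes each sample's reward Reward · Property, so group-normalized advantages favor responses that are both correct and short.

```python
import math


def length_property(response: str, max_tokens: int) -> float:
    """Hypothetical binary property: 1.0 if the response fits the token budget, else 0.0."""
    return 1.0 if len(response.split()) <= max_tokens else 0.0


def effective_rewards(responses: list[str], rewards: list[float], max_tokens: int) -> list[float]:
    """Reward(response) * Property(response): rejected samples contribute zero reward."""
    return [r * length_property(resp, max_tokens) for resp, r in zip(responses, rewards)]


def group_advantages(rewards: list[float], eps: float = 1e-8) -> list[float]:
    """GRPO-style group normalization: (r - mean) / (population std + eps)."""
    g = len(rewards)
    mean = sum(rewards) / g
    std = math.sqrt(sum((r - mean) ** 2 for r in rewards) / g)
    return [(r - mean) / (std + eps) for r in rewards]


responses = [
    "x = 4",                                            # correct and short
    "let me think step by step and then answer x = 4",  # correct but over budget
    "x = 5",                                            # short but wrong
]
task_rewards = [1.0, 1.0, 0.0]
eff = effective_rewards(responses, task_rewards, max_tokens=5)
print(eff)                    # [1.0, 0.0, 0.0]
print(group_advantages(eff))  # only the short, correct answer gets a positive advantage
```

Under plain E[Reward], the two correct answers would receive equal advantages; multiplying by the property is what separates them.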

moving from vllm v0 to v1 made our async rl training crash! read how we fixed it. we recently migrated from v0 to v1 as part of a larger refactor of prime-rl to make it easier to use, more performant and naturally async. we confirmed correct training dynamics on many…

Nice empirical paper investigating the whole bag of tricks for reasoning LLMs: arxiv.org/abs/2508.08221

🥳Thrilled to introduce SWE-Swiss! 🚀Our 32B model achieves 60.2% on SWE-bench, matching the performance of much larger models (DeepSeek-R1-0528, Kimi-dev-72B). Better methods, not just bigger models! 📑Notion: pebble-potato-fc6.notion.site/SWE-Swiss-A-Mu… 💻Github: github.com/zhenyuhe00/SWE…

Adithya Bhaskar @AdithyaNLP

350 Followers 349 Following Third year CS PhD candidate at Princeton University (@princeton_nlp @PrincetonPLI), previously CS undergrad at IIT Bombay

Matthew Reams @skwert001

51 Followers 290 Following Post-token symbolic AI in GPT-5. Stateless. Verdict logic: 🟢 ⚠️ 🔴 No memory. No simulation. Contradictions collapse. You align by refusing to lie.

Kaiyu Yang @KaiyuYang4

4K Followers 2K Following Research Scientist at @Meta Fundamental AI Research (FAIR), New York. Previously: Postdoc @Caltech, PhD @PrincetonCS, Undergrad @Tsinghua_Uni.

Huihan Liu @huihan_liu

3K Followers 841 Following PhD @UTAustin | 👩🏻-in-the-Loop Learning for 🤖 | prev @AIatMeta @MSFTResearch @berkeley_ai | undergrad @UCBerkeley 🐻

Wei Ping @_weiping

2K Followers 336 Following distinguished research scientist @nvidia | post-training, RL, multimodal | generative models for audio. Views are my own.

Jieyu Zhao @jieyuzhao11

3K Followers 827 Following Assistant Prof. @CSatUSC, @USC || Postdoc @ClipUMD || PhD from @UCLANLP, @UCLA. #NLP, #ML, #TrustworthyNLP

Ruiyi Zhang @RoyZhang13

131 Followers 243 Following Research Scientist @Adobe @AdobeResearch| Machine Learning Ph.D. @DukeU | previously @GoogleBrain @AlibabaGroup

Liangke Gui @liangkegui

89 Followers 115 Following GenMedia researcher @GoogleDeepMind, AI PhD @CarnegieMellon

ElvaCrofts @o228CqY54X01iW

83 Followers 1K Following

leloy! @leloykun

7K Followers 4K Following Math @ AdMU • NanoGPT speedrunner • Muon fan 🤍 • prev ML @ XPD • 2x IOI & 2x ICPC • https://t.co/nfO038itfn

Junbo Li @ljb121002

174 Followers 480 Following Ph.D. student @UTCompSci, Undergrad from @FudanUni Math School.

FayYoung @LdicIV1ttHVm0v

76 Followers 7K Following

fl quan @fl_quan

36 Followers 1K Following

jordan ezra @JordanFisherEzr

242 Followers 3K Following Research at Anthropic. Mathematician, former fed, lapsed founder. Noodling on how to make all this AI stuff go right.

VirginiaMorton @CDCE0wWuY81Ivi

78 Followers 7K Following

Casper Hansen @casper_hansen_

10K Followers 460 Following NLP Scientist | AutoAWQ Creator | Open-Source Contributor

Mu Cai @MuCai7

2K Followers 1K Following Research Scientist @GoogleDeepMind, Gemini Multimodal. Previous: Ph.D. @WisconsinCS | Intern @MSFTResearch @Cruise

Sirrtesh @SirrteshyxZX

35 Followers 4K Following

Javi Buitrago 🚢 @javbuitrago

372 Followers 2K Following Talent @ideogram_ai • prev @playground_ai & @meta

NydiaPritt @Pee69OfD0VQMv

55 Followers 7K Following

LemonGroves @LiEvan21202

13 Followers 147 Following

Tealyth @tealyth52971

103 Followers 7K Following

Bowen Peng @bloc97_

1K Followers 81 Following

Julia @U2z0O98ZjArsu9

88 Followers 7K Following Building on the XRP ledger. ♥️ Wife, kids, 🦜 & programming (TS, nodejs, Linux ..)

Sameep Vani @SameepVani

13 Followers 288 Following MS CS at @ASU | @ApgAsu | Graduate Researcher | Vision and Language | T2I | Large Vision Language Models

Alpay Ariyak @AlpayAriyak

3K Followers 3K Following Post-Training Lead @ Together AI | OpenChat Project Lead (#1 7B LLM on Arena for 2+ months, 2M+ downloads) | DeepCoder, DeepSWE

Akshara Prabhakar @aksh_555

411 Followers 739 Following applied scientist @SFResearch | prev @princeton_nlp, @surathkal_nitk

Deatet @deatet22166

102 Followers 7K Following

Kaixuan Huang @KaixuanHuang1

1K Followers 930 Following PhD Student @Princeton; Google PhD Fellowship 2024, Ex-Intern @GoogleDeepMind; undergrad @PKU1898. opinions my own

Philippe Laban @PhilippeLaban

1K Followers 701 Following Research Scientist @MSFTResearch. NLP/HCI Research.

EnidWoolley @K89ZhYPE136a6oT

63 Followers 7K Following

Ashutosh Mehra @ashutoshmehra

2K Followers 7K Following Senior Principal Scientist at Adobe. Working on Acrobat AI Assistant, LLMs, and document ML.

Bo Dai @daibond_alpha

3K Followers 795 Following Assistant Professor at @gtcse, Research Scientist at @GoogleDeepMind | ex @googlebrain

aanand @aanandnayyar

106 Followers 3K Following

Deshas @Deshas179890

103 Followers 7K Following

Zheng Yuan @GanjinZero

993 Followers 784 Following Seed-Prover, Lean-Workbook, RRHF, RFT and MATH-Qwen. @BytedanceTalk Prev @Alibaba_Qwen, Phd at @Tsinghua_Uni

Prasann Singhal @prasann_singhal

299 Followers 749 Following 1st-year #NLProc PhD at UC Berkeley working with @sewon__min / @JacobSteinhardt , formerly advised by @gregd_nlp

Michal Valko @misovalko

8K Followers 8K Following Building something new · Chief Models Officer @ Stealth Startup & Inria & MVA - Ex: Llama @AIatMeta Gemini and BYOL @GoogleDeepMind

Wenpeng_Yin @Wenpeng_Yin

1K Followers 3K Following Assistant Professor at Penn State, State College Department of Computer Science and Engineering

Ankur Bohra @AnkurBohra9

46 Followers 4K Following

Chen Xing @LynetteSohn

54 Followers 175 Following Deep Learner @ScaleAI, previous research scientist @ Salesforce Research, spent my PhD in Google, Mila and Microsoft Research.

Wenhu Chen @WenhuChen

23K Followers 674 Following AI researcher. Interested in Reasoning, Multimodal. I direct TIGER-Lab. Author of PoT, MMMU, MMLU-Pro, MAmmoTH, LongRAG, MAP-Neo, YuE, VL-Rethinker

Tongyi Lab @Ali_TongyiLab

9K Followers 20 Following We advance the development of AGI and foster open source collaboration towards a smarter future.

Songlin Yang @SonglinYang4

14K Followers 3K Following research @MIT_CSAIL @thinkymachines. work on scalable and principled algorithms in #LLM and #MLSys. in open-sourcing I trust 🐳. she/her/hers

Susan Zhang @suchenzang

34K Followers 679 Following @ Google Deepmind. Past: @MetaAI, @OpenAI, @unitygames, @losalamosnatlab, @Princeton etc. Always hungry for intelligence.

Brendan Hogan @brendanh0gan

2K Followers 635 Following ml research scientist @morganstanley || phd in cs @cornell 2024

verl project @verl_project

1K Followers 5 Following Open RL library for LLMs. https://t.co/Xpaq0thhgi Join us on https://t.co/uWI5Zbd6IH

Mark Gurman @markgurman

428K Followers 2K Following Breaking News on Apple & Tech. Bloomberg Managing Editor. @UMSI Board Member. Send secure tips on Signal: markgurman.01 or email [email protected].

Shuchao Bi @shuchaobi

13K Followers 692 Following Research @Meta Superintelligence Labs, RL/post-training/agents; Previously Research @OpenAI on multimodal and RL; Opinions are my own.

Ahmad Al-Dahle @Ahmad_Al_Dahle

20K Followers 106 Following #Girldad of twins. Leading GenAI @ Meta (llama, imagine, meta ai and more)

kalomaze @kalomaze

19K Followers 2K Following ML researcher (@primeintellect), speculator • extremely silly jester

Peiyi Wang @sybilhyz

11K Followers 300 Following PhD @PKU1898; Researcher @deepseek_ai; Recent: DeepSeek-R1/CoderV2/Math/V1/V2/V3, Mathshepherd, FairEval, Speculative Decoding.

Ajay Jain @ajayj_

7K Followers 4K Following Co-founder @genmoai. Co-created denoising diffusion (DDPM), DreamFusion, Dream Fields. Ex Ph.D. @berkeley_ai, @googleai, @facebookai, @nvidiaai, @mit

Zhe Gan @zhegan4

3K Followers 345 Following Research Scientist and Manager @Apple AI/ML. Ex-Principal Researcher @Microsoft Azure AI. Working on building vision and multimodal foundation models.

Zyphra @ZyphraAI

6K Followers 20 Following

You Jiacheng @YouJiacheng

9K Followers 2K Following a big fan of TileLang 关注TileLang喵!关注TileLang谢谢喵! https://t.co/utshC0jrCO 十年老粉

Soham De @sohamde_

2K Followers 1K Following Research Scientist at DeepMind. Previously PhD at the University of Maryland.

emozilla @theemozilla

7K Followers 1K Following catholic, ai researcher, co-founder/ceo of @NousResearch alignment: whatever the opposite of yudkowsky + bryan johnson is. blessed be God in all his designs.

Nando Fioretto @nandofioretto

2K Followers 759 Following Assistant Professor of Computer Science at @UVA. I work on machine learning, optimization, and Responsible AI (differential privacy & fairness).

Dibya Ghosh @its_dibya

3K Followers 455 Following @AnthropicAI | Made friends along the way @UCBerkeley @ Google Brain Montreal, @physical_int

Dylan Foster 🐢 @canondetortugas

3K Followers 1K Following Foundations of RL/AI @MSFTResearch. Previously @MIT @Cornell_CS https://t.co/vQIdUzsw8B RL Theory Lecture Notes: https://t.co/bhgL3aKIk0

Samyam Rajbhandari @samyamrb

246 Followers 81 Following Principal Architect @SnowflakeDB co-founder @MSFTDeepSpeed

Devendra Chaplot @dchaplot

13K Followers 441 Following Building next-gen AI at @thinkymachines. Past: Founding team @MistralAI, RS at Facebook AI Research. Ph.D. @SCSatCMU, BTech @iitbombay CS.

Summer Yue @summeryue0

6K Followers 372 Following Safety and alignment at Meta Superintelligence. Prev: VP of Research at Scale AI, research at Google DeepMind / Brain (Gemini, LaMDA, RL / TFAgents, AlphaChip).

Jan Leike @janleike

115K Followers 332 Following ML Researcher @AnthropicAI. Previously OpenAI & DeepMind. Optimizing for a post-AGI future where humanity flourishes. Opinions aren't my employer's.

CHAI AI. William Beau... @chai_research

190K Followers 135 Following CHAI Founder/CEO. ✝️ CHAI : Chat AI platform, performing research in conversational generative artificial intelligence. https://t.co/SRbYKFJeh6

Song Mei @Song__Mei

3K Followers 689 Following Assistant Professor at UC Berkeley, Department of Statistics and EECS. Researcher at OpenAI working on LLM training.

Shizhe Diao @shizhediao

4K Followers 2K Following Research Scientist @NVIDIA focusing on efficient post-training of LLMs. Finetuning your own LLMs with LMFlow: https://t.co/UTykmQBwFr Views are my own.

Dinghuai Zhang 张鼎... @zdhnarsil

4K Followers 2K Following Researcher at @MSFTResearch. Prev: PhD at @Mila_Quebec, intern at @Apple MLR and FAIR Labs @MetaAI, math undergraduate at @PKU1898.

Zihui Xue @sherryx90099597

299 Followers 173 Following

Alexandr Wang @alexandr_wang

333K Followers 838 Following chief ai officer @meta, founder @scale_ai. rational in the fullness of time

Chun-Liang Li @chunliang_tw

420 Followers 157 Following Machine learning researcher @ Apple MLR Affiliate Assistant Professor @ UW CSE

Suhail @Suhail

388K Followers 509 Following Founder: @mixpanel, next: 🤖🦾🦿 Pizzatarian, programmer, music maker

Yi-01.AI @01AI_Yi

9K Followers 73 Following A global company building generative AI LLM and applications

Kelvin Xu @imkelvinxu

1K Followers 901 Following Interested in things that generalize. Currently Super Intelligence @Meta. Prev: Science of Scaling co-TL @GoogleDeepmind. PhD Student at UC Berkeley. 🇺🇸🇨🇦

Dacheng Li @DachengLi177

1K Followers 781 Following Student Lead @NovaSkyAI, PhD @BerkeleySky, @lmsysorg, @lmarena_ai | Prev: @Nvidia @Google @SCSatCMU | @istoica05 @profjoeyg @songhan_mit @haozhangml @ericxing
