Today, let's learn how to fine-tune OpenAI's latest gpt-oss locally. We'll give it multilingual reasoning capabilities, as shown in the video.

We'll use:
- @UnslothAI for efficient fine-tuning.
- @huggingface transformers to run it locally.

Let's begin!

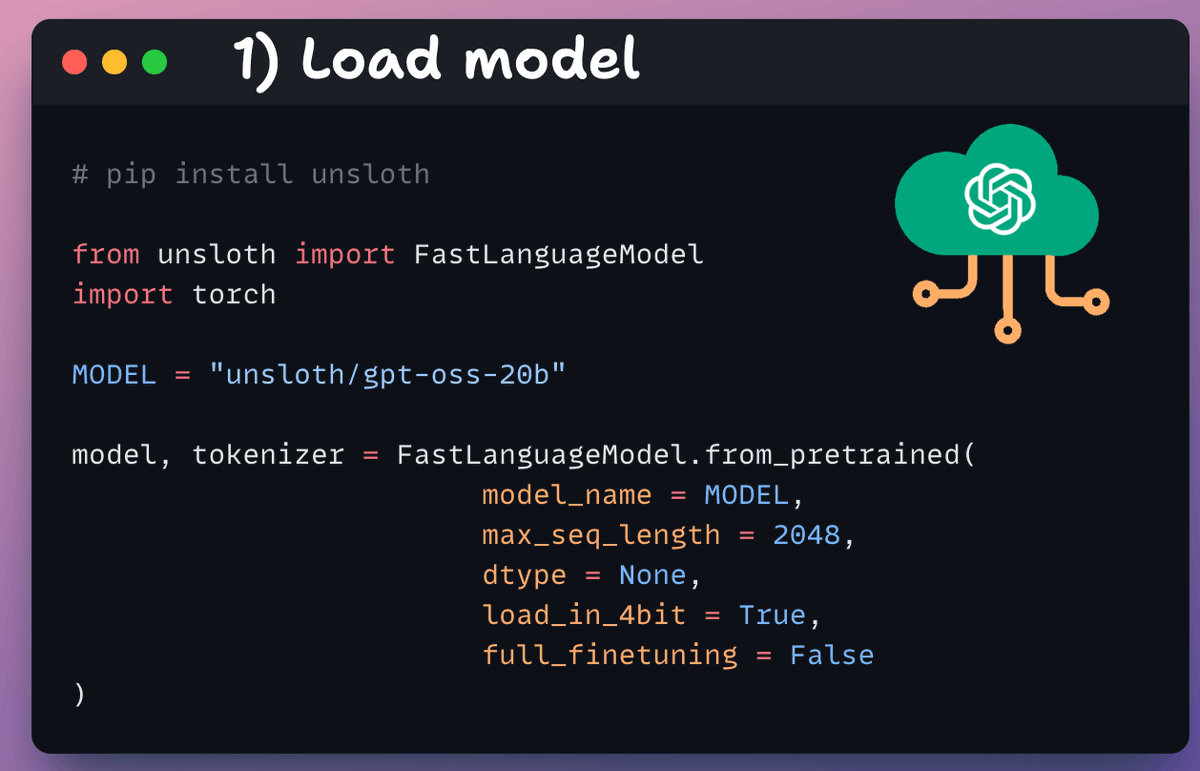

1️⃣ Load the model

We start by loading the gpt-oss model (20B variant) and its tokenizer using Unsloth. Check this 👇
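The code for this step isn't in the text, so here is a minimal sketch of what loading could look like with Unsloth's `FastLanguageModel.from_pretrained`. The repo id `unsloth/gpt-oss-20b`, the sequence length, the 4-bit setting, and the helper name `load_gpt_oss` are all assumptions for illustration, not values confirmed by the thread.

```python
# Assumed settings: none of these values are given in the thread's text.
LOAD_KWARGS = {
    "model_name": "unsloth/gpt-oss-20b",  # assumed Hugging Face Hub repo id
    "max_seq_length": 1024,               # assumed context length for training
    "dtype": None,                        # None lets Unsloth pick a dtype
    "load_in_4bit": True,                 # assumed: 4-bit weights to save VRAM
}


def load_gpt_oss(**overrides):
    """Hypothetical helper: load the model and tokenizer with Unsloth.

    Unsloth is imported inside the function because it expects a GPU
    environment; the settings above can be inspected without it.
    """
    from unsloth import FastLanguageModel

    kwargs = {**LOAD_KWARGS, **overrides}
    model, tokenizer = FastLanguageModel.from_pretrained(**kwargs)
    return model, tokenizer
```

On a GPU machine, `model, tokenizer = load_gpt_oss()` would download the weights and return both objects. Pass overrides such as `max_seq_length=2048` if your hardware allows it.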

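2️⃣ Define LoRA config

We'll use LoRA for efficient fine-tuning: instead of updating all the weights, we train small low-rank adapter matrices attached to selected layers. To do this, we use Unsloth's PEFT support and specify:
- The model
- The LoRA rank (r)
- The layers to fine-tune, etc.

Check this code 👇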
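As a rough sketch of this step, the snippet below wraps a loaded model with LoRA adapters via Unsloth's `FastLanguageModel.get_peft_model`. The rank, alpha, target modules, seed, and the helper name `add_lora_adapters` are assumptions chosen for illustration; the thread's actual values aren't in the text.

```python
# Assumed LoRA settings: illustrative values, not the thread's own.
LORA_KWARGS = {
    "r": 8,                       # LoRA rank: size of the low-rank update
    "target_modules": [           # assumed attention and MLP projections
        "q_proj", "k_proj", "v_proj", "o_proj",
        "gate_proj", "up_proj", "down_proj",
    ],
    "lora_alpha": 16,             # scaling factor applied to the update
    "lora_dropout": 0,            # no dropout on the adapter inputs
    "bias": "none",               # leave bias terms frozen
    "use_gradient_checkpointing": "unsloth",  # trade compute for memory
    "random_state": 3407,         # seed for adapter initialization
}


def add_lora_adapters(model, **overrides):
    """Hypothetical helper: attach LoRA adapters to an Unsloth-loaded model."""
    from unsloth import FastLanguageModel  # imported lazily; expects a GPU

    kwargs = {**LORA_KWARGS, **overrides}
    return FastLanguageModel.get_peft_model(model, **kwargs)
```

A larger `r` gives the adapters more capacity at the cost of more trainable parameters, so it is usually the first value to tune.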
