Arnab Sen Sharma @arnab_api

Ph.D. student @KhouryCollege, working to make LLMs interpretable | arnab-api.github.io | Boston, MA | Joined September 2022

Tweets: 49 | Followers: 183 | Following: 141 | Likes: 181

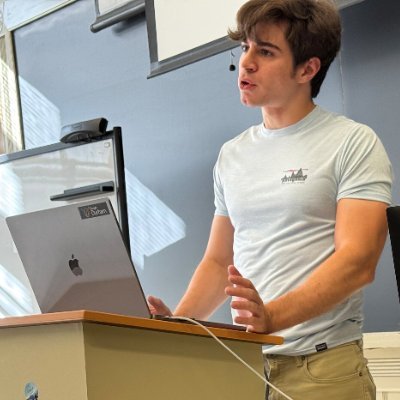

Who is going to be at #COLM2025? I want to draw your attention to a COLM paper by my student @sheridan_feucht that has totally changed the way I think and teach about LLM representations. The work is worth knowing. And you can meet Sheridan at COLM on Oct 7! https://t.co/L8TtQHFiqC

How do language models track the mental states of each character in a story, an ability often referred to as Theory of Mind? Our recent work takes a step toward demystifying it by reverse engineering how Llama-3-70B-Instruct solves a simple belief tracking task, and surprisingly found that it…

💡 New ICLR paper! 💡 "On Linear Representations and Pretraining Data Frequency in Language Models": We provide an explanation for when & why linear representations form in large (or small) language models. Led by @jack_merullo_ , w/ @nlpnoah & @sarahwiegreffe

Lots of progress in mech interp (MI) lately! But how can we measure when new mech interp methods yield real improvements over prior work? We propose 😎 𝗠𝗜𝗕: a Mechanistic Interpretability Benchmark!

💨 A new architecture for automating mechanistic interpretability with causal interchange intervention! #ICLR2025 🔬Neural networks are particularly good at discovering patterns from high-dimensional data, so we trained them to ... interpret themselves! 🧑🔬 1/4

[📄] Are LLMs mindless token-shifters, or do they build meaningful representations of language? We study how LLMs copy text in-context, and physically separate out two types of induction heads: token heads, which copy literal tokens, and concept heads, which copy word meanings.

In case you ever wondered what you could do if you had SAEs for intermediate results of diffusion models, we trained SDXL Turbo SAEs on 4 blocks for you. We noticed that they specialize into a "composition", a "detail", and a "style" block. And one that is hard to make sense of.

Why is interpretability the key to dominance in AI? Not winning the scaling race, or banning China. Our answer to OSTP/NSF, w/ Goodfire's @banburismus_ Transluce's @cogconfluence MIT's @dhadfieldmenell resilience.baulab.info/docs/AI_Action… Here's why:🧵 ↘️

DeepSeek R1 shows how important it is to be studying the internals of reasoning models. Try our code: Here @can_rager shows a method for auditing AI bias by probing the internal monologue. dsthoughts.baulab.info I'd be interested in your thoughts.

More big news! Applications are open for the NDIF Summer Engineering Fellowship—an opportunity to work on cutting-edge AI research infrastructure this summer in Boston! 🚀

why do language models think 9.11 > 9.9? at @TransluceAI we stumbled upon a surprisingly simple explanation - and a bugfix that doesn't use any re-training or prompting. turns out, it's about months, dates, September 11th, and... the Bible? https://t.co/8gzm89FJ0K

Hallucinations are a subject of much interest, but how much do we know about them? In our new paper, we found that the internals of LLMs contain far more information about truthfulness than we knew! 🧵 Project page >> llms-know.github.io Arxiv >> arxiv.org/abs/2410.02707

Attending @COLM_conf in Philadelphia! Drop by our poster on Wednesday morning (Session 5, 11 am - 1 pm, #8) Would love to catch up and chat about interpretability. Give me a DM!

What should be the goal of unlearning in language models? In our new preprint we look at this question carefully and propose a new erasing method, "ELM," that erases knowledge from LLMs very cleanly. It is driven by three key goals - here is an explainer: 🧵👇

Thanks Tal! 📜 In this paper, we provide a theoretically grounded review of causal (which, imo, ⊇ mechanistic) interpretability. We argue that this gives a more cohesive narrative of the field, and makes it easier to see actionable open directions for future work! 🧵

Thanks to my many great coauthors for essential contributions to this review! @BrinkmannJannik @millicent_li @saprmarks @kpal_koyena @nikhil07prakash @can_rager @arunasank @arnab_api @SunJiuding @ericwtodd @davidbau @boknilev More in the paper! 📜 arxiv.org/abs/2408.01416

Time to study #llama3 405b, but gosh it's big! Please retweet: if you have a great experiment but not enough GPU, here is an opportunity to apply for shared #NDIF research resources. Deadline July 30: ndif.us/405b.html You'll help @ndif_team test, we'll help you run 405b

NNsight and NDIF: Democratizing Access to Foundation Model Internals
The enormous scale of state-of-the-art foundation models has limited their accessibility to scientists, because customized experiments at large model sizes require costly hardware and complex engineering

Just reached Vienna to attend ICLR! Stop by our poster session tomorrow (10:45 am, Hall B, #131). Would love to chat with people about interpretability and AI alignment. DMs are open!

Kevin Lu @kevinlu4588

40 Followers 91 Following Diffusion & pLM Research @ Northeastern (Bau Lab) @ NeurIPS 2025 Main Track Presentations!

Robert Scoble @Scobleizer

543K Followers 24K Following The best from ML/AI community | Ex-Microsoft, Rackspace, Fast Company | Wrote eight books about the future | Silicon Valley robots, holodecks, BCIs, & startups.

Mushfiqur Rahman @Mushfique83

39 Followers 871 Following

Yulu Qin @yulu_qin

163 Followers 448 Following

Ibrahim Adiza @linda7472297404

1 Followers 260 Following

Ibrahim Adiza @deborah2434312

1 Followers 291 Following

Alan Saji @AlanSaji2251

33 Followers 143 Following Research Associate @AI4Bharat Interests include AI interpretability and reasoning Prev: Axis Bank, IIT Madras

Md Adith Mollah (mr. ... @Adith082

5 Followers 133 Following csegrad @sustbd | Trustworthy ML and XAI enthusiast | Alumni of @aspire_leaders | Competitive Programmer | Content Creator @Youtube

Jung Hoon Son, M.D. @junghoon_sonMD

1K Followers 3K Following knowledge architect // pathologist, informaticist. we need to vote policy, not along political party.

Swati A @swatiardeshna

181 Followers 8K Following

henry castillo @henrycstllo

89 Followers 646 Following phd @tamu. prev: swe @stripe, bs @utaustin. i want to mechanistically understand models through the lens of training dynamics. 🇵🇪🏳️🌈

Vartan Polodyan @VarPol

196 Followers 6K Following

James Morrison Rubin @import_jmr

7K Followers 6K Following Product Lead | Google Gemini Prev: Launched @aws Trainium, @alexa99 Echo Show 5 Tweets are my own. Retweets are not endorsements. Joyful Learning Machines

Imranur Rahman @imranur7

109 Followers 795 Following PhD Student at NC State, Security and Privacy enthusiast, ex Samsung

Subramanyam Sahoo @iamwsubramanyam

197 Followers 4K Following Independent AI Safety researcher, M. Tech x Summa Cum Laude @NITHamirpurHP. BASIS Fellow @UCBerkeley, RA @HarvardAISafety. Get Published or Die Trying.

AI Safety Papers @safe_paper

2K Followers 224 Following Sharing the latest in AI safety research. "One who says they have no time to read papers will never read papers even when they have time a-plenty."

worst tweep eune @mmn3mm

723 Followers 324 Following I hacked a friend and it didn't feel bad at all. @r3billions

Spairsairs @SpairsairsEMk1

41 Followers 2K Following

David D. Baek @dbaek__

2K Followers 31 Following PhD Student @ MIT EECS / Mechanistic Interpretability, Scalable Oversight

Sarah Wiegreffe @sarahwiegreffe

5K Followers 1K Following Research in NLP (mostly LM interpretability & explainability). Assistant prof @umdcs @clipumd Formerly @allen_ai @uwnlp @icatgt @gtcomputing Views my own.

ineffable alias @joorosa12185462

158 Followers 8K Following

AI News International... @AINewsInt

1K Followers 7K Following 🔺Advancing The State Of Artificial Intelligence

Chris Wendler @wendlerch

544 Followers 852 Following PostDoc at Northeastern university; LAION contributor; I like deep learning & open source.

Enamul Hassan @enamcse

167 Followers 978 Following PhD Student @ CS, SBU, NY | Assistant Professor @ CSE, SUST, BD | Sports Programmer | Parent | Husband

Natalie Shapira @NatalieShapira

1K Followers 284 Following Tell me about challenges, the unbelievable, the human mind and artificial intelligence, thoughts, social life, family life, science and philosophy.

zkhani @zkhani7

2K Followers 3K Following

francesco ortu @francescortu

251 Followers 1K Following NLP & Interpretability | PhD Student @UniTrieste & Data Engineering Lab @AreaSciencePark | Prev @MPI_IS intern https://t.co/RM03tgnbJP

Michael Hanna @michaelwhanna

615 Followers 441 Following PhD student at the University of Amsterdam / ILLC, interested in computational linguistics and (mechanistic) interpretability. Current Anthropic Fellow.

Shun Shao 邵顺 @ShunShao6

50 Followers 367 Following Phd student @CambridgeLTL, MScR @EdinburghNLP @InfAtEd, MEng @ucl. Working on large language models, LLM Interpretability, Spectral learning.

Michael Toker @michael_toker

137 Followers 520 Following PhD candidate @Technion NLP lab - Developing explainability methods to gain a better understanding of Text to Image models

KN throwaway @Knthrowaway2222

6 Followers 167 Following

Mike Allton | AI for ... @mike_allton

51K Followers 8K Following AI for Creators & Solopreneurs | Automate your biz, reclaim your time. Host @ The AI Hat Podcast. Learn how below! 👇

Soda Fries @soda4fries

0 Followers 4 Following

Danial Namazifard @IamDanialNamazi

675 Followers 6K Following Graduate Researcher at University of Tehran #NLProc #MachineLearning

Froughtoa @Froughtoa2fyIw

65 Followers 7K Following

Amir H. Kargaran @amir_nlp

797 Followers 3K Following On job market (Fall 2025) / 🤖 PhD student @CisLmu/ 🛠️ Multilingual NLP / Previous: Intern @huggingface. My views!

James Michaelov @jamichaelov

366 Followers 737 Following Postdoc @MIT. Previously: @CogSciUCSD, @CARTAUCSD, @AmazonScience, @InfAtEd, @SchoolofPPLS. Research: language comprehension, the brain, artificial intelligence

Mert İnan @Merterm

270 Followers 2K Following CS PhD candidate @Northeastern Cognitive-aware MM convAI interdisciplinarity lover @FulbrightPrgrm @SCSatCMU 🦋: @merterm.bsky.social

8jag @0x386a6167

99 Followers 2K Following

Prakash Kagitha @prakashkagitha

371 Followers 2K Following Research Assistant @DrexelUniv. Improving planning with LLMs. Previously, Lead Data Scientist @ https://t.co/yohjwHWaMY

Seytasa @seytasa47488

69 Followers 5K Following

Gary Marcus @GaryMarcus

194K Followers 7K Following “In the aftermath of GPT-5’s launch … the views of critics like Marcus seem increasingly moderate.” —@newyorker

Wes Gurnee @wesg52

3K Followers 228 Following Trying to read Claude’s mind. Interpretability at @AnthropicAI Prev: Optimizer @MIT, Byte-counter @Google

James Morrison Rubin @import_jmr

7K Followers 6K Following Product Lead | Google Gemini Prev: Launched @aws Trainium, @alexa99 Echo Show 5 Tweets are my own. Retweets are not endorsements. Joyful Learning Machines

Md Mahadi Hasan Nahid @mhn_nahid

561 Followers 2K Following CS PhD student @UAlberta 🍁 | | working on #NLProc #LLM | Father | Loves 👨🍳 ✈️ 🌎| 🇧🇩|🇨🇦|🇨🇳|🇮🇳|🇫🇷|🇵🇹|🇦🇪|🇲🇽

Enamul Hassan @enamcse

167 Followers 978 Following PhD Student @ CS, SBU, NY | Assistant Professor @ CSE, SUST, BD | Sports Programmer | Parent | Husband

DiscussingFilm @DiscussingFilm

2.6M Followers 819 Following Your leading source for quick reliable news. Home for healthy and liberating discussion on all things pop culture. (Affiliate links shared earn us commissions)

Sarah Wiegreffe @sarahwiegreffe

5K Followers 1K Following Research in NLP (mostly LM interpretability & explainability). Assistant prof @umdcs @clipumd Formerly @allen_ai @uwnlp @icatgt @gtcomputing Views my own.

David D. Baek @dbaek__

2K Followers 31 Following PhD Student @ MIT EECS / Mechanistic Interpretability, Scalable Oversight

Actionable Interpreta... @ActInterp

254 Followers 11 Following 🛠️ Actionable Interpretability🔎 @icmlconf 2025 | Bridging the gap between insights and actions ✨ https://t.co/4zRMTbzwDc

Chris Wendler @wendlerch

544 Followers 852 Following PostDoc at Northeastern university; LAION contributor; I like deep learning & open source.

Natalie Shapira @NatalieShapira

1K Followers 284 Following Tell me about challenges, the unbelievable, the human mind and artificial intelligence, thoughts, social life, family life, science and philosophy.

Josh Engels @JoshAEngels

1K Followers 117 Following Mech interp @GoogleDeepMind | on leave from my PhD @MIT. Let's use interp to make models safer today

Roger Beaty @Roger_Beaty

3K Followers 2K Following Associate Professor of Psychology @penn_state. Director of the Cognitive Neuroscience of Creativity Lab. President-elect @tsfnc.

Yoed Kenett @yoed_kenett

1K Followers 995 Following Assistant Professor at the Technion - Israel Institute of Technology. Studying High-level cognition, creativity, cognitive networks, knowledge

Open Philanthropy @open_phil

18K Followers 231 Following Open Philanthropy's mission is to help others as much as we can with the resources available to us.

Grant Sanderson @3blue1brown

414K Followers 362 Following Pi creature caretaker. Contact/faq: https://t.co/brZwdQfdif

Jan Leike @janleike

116K Followers 332 Following ML Researcher @AnthropicAI. Previously OpenAI & DeepMind. Optimizing for a post-AGI future where humanity flourishes. Opinions aren't my employer's.

Percy Liang @percyliang

85K Followers 420 Following Associate Professor in computer science @Stanford @StanfordHAI @StanfordCRFM @StanfordAILab @stanfordnlp | cofounder @togethercompute | Pianist

Stella Biderman @BlancheMinerva

17K Followers 812 Following Open source LLMs and interpretability research at @AiEleuther. She/her

Dan Hendrycks @DanHendrycks

42K Followers 111 Following • Center for AI Safety Director • xAI and Scale AI advisor • GELU/MMLU/MATH/HLE • PhD in AI • Analyzing AI models, companies, policies, and geopolitics

Ekdeep Singh @EkdeepL

2K Followers 1K Following Member of Technical Staff @GoodfireAI; Previously: Postdoc / PhD at Center for Brain Science, Harvard and University of Michigan

AK @_akhaliq

429K Followers 3K Following AI research paper tweets, ML @Gradio (acq. by @HuggingFace 🤗) dm for promo ,submit papers here: https://t.co/UzmYN5YmrQ

Michael Hanna @michaelwhanna

615 Followers 441 Following PhD student at the University of Amsterdam / ILLC, interested in computational linguistics and (mechanistic) interpretability. Current Anthropic Fellow.

NDIF @ndif_team

222 Followers 0 Following The National Deep Inference Fabric, an NSF-funded computational infrastructure to enable research on large-scale Artificial Intelligence. https://t.co/STsQ707an3

Adam Karvonen @a_karvonen

3K Followers 571 Following ML Researcher, doing MATS with Owain Evans. I prefer email to DM.

Yoshua Bengio @Yoshua_Bengio

26K Followers 211 Following Working towards the safe development of AI for the benefit of all @UMontreal, @LawZero_ & @Mila_Quebec A.M. Turing Award Recipient and most-cited AI researcher.

METR @METR_Evals

12K Followers 29 Following An AI research non-profit advancing the science of empirically testing AI systems for capabilities that could threaten catastrophic harm to society.

Yannick Assogba @tafsiri

1K Followers 440 Following Research Scientist @ Apple AI/ML. Prev: Google Brain / PAIR

Alex Loftus @AlexLoftus19

166 Followers 480 Following Our textbook is on amazon now! https://t.co/ayc3bMWVFt https://t.co/CUEOxFRDse | PhD student, Bau lab @ Northeastern. Studying LLM internals.

Diego Garcia-Olano @dgolano

601 Followers 971 Following Senior Research Scientist at @MetaAI MSL/GenAI Trust/Responsible AI. PhD @UTAustin 22. @IBMResearch 20, @GoogleAI 18/19, @datascifellows 16, @la_UPC 15

Thomas G. Dietterich @tdietterich

58K Followers 625 Following Distinguished Professor (Emeritus), Oregon State Univ.; Former President, Assoc. for the Adv. of Artificial Intelligence; Robust AI & Comput. Sustainability

Transluce @TransluceAI

8K Followers 15 Following Open and scalable technology for understanding AI systems.

Eric J. Michaud @ericjmichaud_

3K Followers 1K Following PhD student at MIT. Trying to make deep neural networks among the best understood objects in the universe. 💻🤖🧠👽🔭🚀

Hadas Orgad @ ICML @OrgadHadas

622 Followers 131 Following PhD student @ Technion | Focused on AI interpretability, robustness & safety | Because black boxes don’t belong in critical systems

James Michaelov @jamichaelov

366 Followers 737 Following Postdoc @MIT. Previously: @CogSciUCSD, @CARTAUCSD, @AmazonScience, @InfAtEd, @SchoolofPPLS. Research: language comprehension, the brain, artificial intelligence