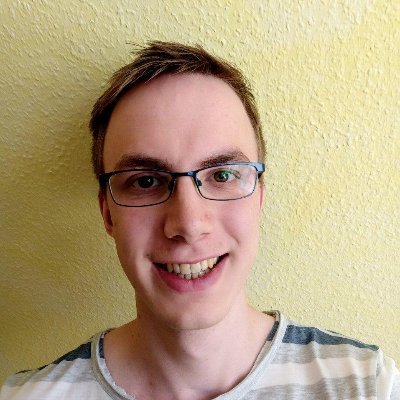

Erik Jenner @jenner_erik

Research scientist @ Google DeepMind working on AGI safety & alignment | ejenner.com | Joined April 2016

Tweets: 183 | Followers: 918 | Following: 152 | Likes: 552

MATS is a great opportunity to start your career in AI safety! For MATS 9.0 I'll be running a research stream together with @emmons_scott, @jenner_erik, and @zimmerrol. If you want to do research on AI oversight and control, apply now!

🚀 Applications now open: Constellation's Astra Fellowship 🚀 We're relaunching Astra — a 3-6 month fellowship to accelerate AI safety research & careers. Alumni @eli_lifland & Romeo Dean co-authored AI 2027 and co-founded @AI_Futures_ with their Astra mentor @DKokotajlo!

Prior work has found that Chain of Thought (CoT) can be unfaithful. Should we then ignore what it says? In new research, we find that the CoT is informative about LLM cognition as long as the cognition is complex enough that it can’t be performed in a single forward pass.

The fact that current LLMs have reasonably legible chain of thought is really useful for safety (as well as for other reasons)! It would be great to keep it this way

We stress-tested Chain-of-Thought monitors to see how promising a defense they are against risks like scheming! I think the results are promising for CoT monitoring, and I'm very excited about this direction. But we should keep stress-testing defenses as models get more capable.

As models advance, a key AI safety concern is deceptive alignment / "scheming" – where AI might covertly pursue unintended goals. Our paper "Evaluating Frontier Models for Stealth and Situational Awareness" assesses whether current models can scheme. arxiv.org/abs/2505.01420

Can frontier models hide secret information and reasoning in their outputs? We find early signs of steganographic capabilities in current frontier models, including Claude, GPT, and Gemini. 🧵

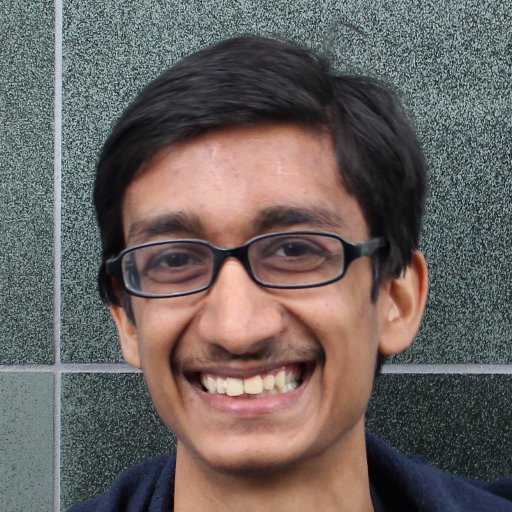

My @MATSprogram scholar Rohan just finished a cool paper on attacking latent-space probes with RL! Going in, I was unsure whether RL could explore into probe-bypassing policies, or change the activations enough. Turns out it can, but not always. Go check out the thread & paper!

New episode with @davlindner, covering his work on MONA! Check it out - video link in reply. https://t.co/CStTMbO83z

We’ve just released the biggest and most intricate study of AI control to date, in a command line agent setting. IMO the techniques studied are the best available option for preventing misaligned early AGIs from causing sudden disasters, e.g. hacking servers they’re working on.

IMO, this isn't much of an update against CoT monitoring hopes. They show unfaithfulness when the reasoning is minimal enough that it doesn't need CoT. But my hopes for CoT monitoring rest on models having to reason a lot to end up misaligned and cause huge problems. 🧵

UK AISI is hiring, consider applying if you're interested in adversarial ML/red-teaming. Seems like a great team, and I think it's one of the best places in the world for doing adversarial ML work that's highly impactful

Consider applying for MATS if you're interested in working on an AI alignment research project this summer! I'm a mentor, as are many of my colleagues at DeepMind.

🚨 New @iclr_conf blog post: Pitfalls of Evidence-Based AI Policy Everyone agrees: evidence is key for policymaking. But that doesn't mean we should postpone AI regulation. Instead of "Evidence-Based AI Policy," we need "Evidence-Seeking AI Policy." arxiv.org/abs/2502.09618…

🧵 Announcing @open_phil's Technical AI Safety RFP! We're seeking proposals across 21 research areas to help make AI systems more trustworthy, rule-following, and aligned, even as they become more capable.

🚀 Applications for the Global AI Safety Fellowship 2025 are closing on 31 December 2025! We're looking for exceptional STEM talent from around the world who can advance the safe and beneficial development of AI. Fellows will get to work full-time with leading organisations in…

David Krueger @DavidSKrueger

18K Followers 4K Following AI professor. Deep Learning, AI alignment, ethics, policy, & safety. Formerly Cambridge, Mila, Oxford, DeepMind, ElementAI, UK AISI. AI is a really big deal.

Miles Brundage @Miles_Brundage

62K Followers 12K Following AI policy researcher, wife guy in training, fan of cute animals and sci-fi, Substack writer, stealth-ish non-profit co-founder

Eugene Vinitsky (@RLC... @EugeneVinitsky

21K Followers 2K Following This is the site where I talk about the attacks on science and immigration. Science is on the other site. Lab website: https://t.co/vrtbcqRyRn

Taco Cohen @TacoCohen

27K Followers 3K Following Post-trainologer at FAIR. Into codegen, RL, equivariance, generative models. Spent time at Qualcomm, Scyfer (acquired), UvA, Deepmind, OpenAI.

davidad 🎇 @davidad

20K Followers 9K Following Programme Director @ARIA_research | accelerate mathematical modelling with AI and categorical systems theory » build safe transformative AI » cancel heat death

Lauro @laurolangosco

1K Followers 700 Following European Commission (AI Office). PhD student @CambridgeMLG. Here to discuss ideas and have fun. Posts are my personal opinions; I don't speak for my employer.

Dylan HadfieldMenell @dhadfieldmenell

4K Followers 2K Following Associate Prof @MITEECS working on value (mis)alignment in AI systems; he/him

Cas (Stephen Casper) @StephenLCasper

6K Followers 4K Following AI technical gov & risk management research. PhD student @MIT_CSAIL, fmr. @AISecurityInst. I'm on the CS faculty job market! https://t.co/r76TGxSVMb

Nora Belrose @norabelrose

11K Followers 120 Following AIs aren't people, they're tools we should use wisely. Head of interpretability research at @AiEleuther, but tweets are my own views, not Eleuther's.

Rianne van den Berg @vdbergrianne

8K Followers 2K Following Principal research manager at Microsoft Research Amsterdam. Formerly at Google Brain and University of Amsterdam. PhD in condensed matter physics.

Tom Lieberum 🔸 @lieberum_t

1K Followers 196 Following Trying to reduce AGI x-risk by understanding NNs Interpretability RE @DeepMind BSc Physics from @RWTH 10% pledgee @ https://t.co/Vh2bvwhuwd

Stanislav Fort @stanislavfort

14K Followers 7K Following Building in AI + security | Stanford PhD in AI & Cambridge physics | ex-Anthropic and DeepMind | scientific progress + economic growth | 🇺🇸🇨🇿

Nathalie Kirch @n_lie_k

22 Followers 195 Following

diana Washington @dianawashingt0n

1K Followers 2K Following Coach • Ideas • Spreading Good Vibes • Here to Inspire & Be Inspired • No Porn • No Bots — Blocked • #MAGA #Trump2025 #PatriotStrong

Vijay Bolina @vijaybolina

4K Followers 6K Following I build and lead deeply technical teams solving some of the hardest problems in the world. Prev CISO @GoogleDeepMind, @Mandiant, @BoozAllen, USG. Tweets my own.

Jenny Qu @GuanniQu

156 Followers 510 Following just learning to be hardcore @Caltech building AI to solve hard math problems she/they

Gabriel Quevedo 💯 @gabrielq111

11 Followers 110 Following

Simple Magic @Simplemagic_ai

6 Followers 140 Following

Quant Hustle @QuantHustle

0 Followers 4K Following

Sandeep More @sandyonmars

99 Followers 419 Following Decipher the complex. Connect the dots. ॥ॐ 🌏 🦋🪐🌌 ॥

Sandra Binoy @SandraBinoy2

0 Followers 60 Following

Andrew Bempah @KrumDonDada

25 Followers 3K Following Proud Kotobabian by way of Chicago & Stanford University

Rauno Arike @RaunoArike

29 Followers 296 Following Working on AI safety at Aether Research Previously @MATSprogram

Teetaj Pavaritpong @TeetajP

41 Followers 1K Following Software Engineer | AI/ML | UIUC ‘24 B.S. in Statistics & Computer Science @SiebelSchool

Anna Soligo @anna_soligo

172 Followers 133 Following PhD Student @imperialcollege // MATS Scholar with Neel Nanda // Sometimes found on big hills ⛰️

M Emre Özkan @memreozkan_

7 Followers 146 Following

Good1 @MLMusings

43 Followers 882 Following Angel Investor | 2x start up founder | Silicon Valley | Drinking the coolaid!

Evan Russek @evanrussek

949 Followers 2K Following Postdoc in cognitive science at Princeton. Interested in computational models of thinking and decision making.

Harshal Nandigramwar @hnanacc

419 Followers 918 Following ai research @intel, prev @cariad_tech, @Uni_Stuttgart • n&w @todacklabs (https://t.co/LPf3PWfbnK, https://t.co/WESRemr9Ev, corpus, marrow)

Hugo @Hugo007600

31 Followers 312 Following

Damon Falck @DamonFalck

19 Followers 108 Following AI safety & alignment @MATSprogram. Prev ML @UniofOxford, quant @DRWTrading.

Anaïs Berkes @anais_berkes

2 Followers 124 Following

prasad @Mahadikprasad15

1K Followers 4K Following Just Curious. Prev crypto @Delphi_Digital @MH_Ventures, current mech interp

Ali Larian @ali_larian

14 Followers 233 Following PhD student @ University of Utah @KahlertSoC | Robotics, Reinforcement Learning

Kyle @kylesuffolk

784 Followers 5K Following

Ed Henderson @edlucidstates

53 Followers 403 Following | Founder @ Lucid States - exploring what it would take to build AI that dreams. | ex-@itsjustvow | Aussie

Cezar @realcezarc

505 Followers 6K Following Software engineer, failed startup founder. Used to work @google, @onepeloton.

Sam @belkalevin84

121 Followers 2K Following

Shashank Kirtania @5hv5hvnk

465 Followers 2K Following pre doc research fellow @prosemsft | interested in AI & Formal Methods

Raymond Ng @Raymondng_aisg

8 Followers 1K Following

Evan Ellis @evandavidellis

142 Followers 768 Following Alignment and coding agents. AI @ BAIR | Scale | Imbue

monedula @velarus3

78 Followers 614 Following BUILDHUB Society - The society for builders - now live! CHECK IT OUT AT https://t.co/ktf6Ms3N7M 📣📣📣

Yasaman Ansari @Yasaman_Ansari

6 Followers 192 Following

Sahar Abdelnabi 🕊 @sahar_abdelnabi

2K Followers 790 Following Researcher @Microsoft | Next: Faculty @ELLISInst_Tue & @MPI_IS | ex. CISPA | Neurodiv. 🦋 | AI safety & security | life & peace for all ☮️🍉 Opinions my own.

Sachit Malik @isachitmalik

165 Followers 4K Following Hola | Security Engineering at Apple | Alum: Carnegie Mellon; IIT Delhi

thomas @thomazvu

453 Followers 2K Following guy who makes MMOs in the browser 🌎 https://t.co/hxuPqjv8IE 🤖 https://t.co/paQs8tsyHe

Wenx @imwendering

55 Followers 755 Following Independent AI Safety Researcher. Formerly @Meta Integrity Seasoned engineer and budding researcher. Occasionally appears in galleries with my paintings

Sharmake Farah (sharm... @SharmakeFarah14

271 Followers 651 Following Thinks AI risk is somewhat likely, and AI benefits huge if we can align AIs to someone that is willing to promote human thriving even when humans are useless.

𝐼𝓃𝒹𝑜𝓂... @de_indomitus

1 Followers 400 Following Neural Nets Whisperer | Weaver of Vision, Language, Sound, & Thought | Hunter of Deep Emergent Truth | Binding Order from Chaos | Designing Steerable Minds

Tomas Tulka 💙💛 @tomas_tulka

136 Followers 876 Following Programmer and technical author. Also, I am interested in math, nature, and fine art.

Richard Ngo @RichardMCNgo

64K Followers 2K Following studying AI and trust. ex @openai/@googledeepmind

Andrej Karpathy @karpathy

1.4M Followers 1K Following Building @EurekaLabsAI. Previously Director of AI @ Tesla, founding team @ OpenAI, CS231n/PhD @ Stanford. I like to train large deep neural nets.

Eliezer Yudkowsky ⏹... @ESYudkowsky

209K Followers 102 Following The original AI alignment person. Understanding the reasons it's difficult since 2003. This is my serious low-volume account. Follow @allTheYud for the rest.

David Krueger @DavidSKrueger

18K Followers 4K Following AI professor. Deep Learning, AI alignment, ethics, policy, & safety. Formerly Cambridge, Mila, Oxford, DeepMind, ElementAI, UK AISI. AI is a really big deal.

Neel Nanda @NeelNanda5

32K Followers 123 Following Mechanistic Interpretability lead DeepMind. Formerly @AnthropicAI, independent. In this to reduce AI X-risk. Neural networks can be understood, let's go do it!

Amanda Askell @AmandaAskell

54K Followers 657 Following Philosopher & ethicist trying to make AI be good @AnthropicAI. Personal account. All opinions come from my training data.

Google DeepMind @GoogleDeepMind

1.2M Followers 279 Following We’re a team of scientists, engineers, ethicists and more, committed to solving intelligence, to advance science and benefit humanity.

davidad 🎇 @davidad

20K Followers 9K Following Programme Director @ARIA_research | accelerate mathematical modelling with AI and categorical systems theory » build safe transformative AI » cancel heat death

Grant Sanderson @3blue1brown

414K Followers 362 Following Pi creature caretaker. Contact/faq: https://t.co/brZwdQfdif

Lauro @laurolangosco

1K Followers 700 Following European Commission (AI Office). PhD student @CambridgeMLG. Here to discuss ideas and have fun. Posts are my personal opinions; I don't speak for my employer.

Kelsey Piper @KelseyTuoc

49K Followers 971 Following We're not doomed, we just have a big to-do list.

Cas (Stephen Casper) @StephenLCasper

6K Followers 4K Following AI technical gov & risk management research. PhD student @MIT_CSAIL, fmr. @AISecurityInst. I'm on the CS faculty job market! https://t.co/r76TGxSVMb

Nora Belrose @norabelrose

11K Followers 120 Following AIs aren't people, they're tools we should use wisely. Head of interpretability research at @AiEleuther, but tweets are my own views, not Eleuther's.

Rob Wiblin @robertwiblin

45K Followers 772 Following Host of the 80,000 Hours Podcast. Exploring the inviolate sphere of ideas one interview at a time: https://t.co/2YMw00bkIQ

Tom Lieberum 🔸 @lieberum_t

1K Followers 196 Following Trying to reduce AGI x-risk by understanding NNs Interpretability RE @DeepMind BSc Physics from @RWTH 10% pledgee @ https://t.co/Vh2bvwhuwd

Connor Leahy @NPCollapse

26K Followers 574 Following CEO @ConjectureAI - Ex-Head of @AiEleuther - Leave me anonymous feedback: https://t.co/OJWQWKNrHk - I don't know how to save the world, but dammit I'm gonna try

Ziyue Wang @ZyWang25

51 Followers 221 Following Research Engineer @ Google DeepMind working on AGI Safety.

Victoria Krakovna @vkrakovna

10K Followers 503 Following Research scientist in AI alignment at Google DeepMind. Co-founder of Future of Life Institute @flixrisk. Views are my own and do not represent GDM or FLI.

Rohan Gupta @RohDGupta

14 Followers 93 Following

Joshua Clymer @joshua_clymer

2K Followers 118 Following Turtle hatchling trying to make it to the ocean. I work at Redwood Research.

Xander Davies @alxndrdavies

2K Followers 728 Following safeguards lead @AISecurityInst | PhD student w @yaringal at @OATML_Oxford | prev @Harvard (https://t.co/695XYMKqjI)

Jasmine @j_asminewang

7K Followers 1K Following alignment @OpenAI. past @AISecurityInst @verses_xyz @kernel_magazine @readtrellis @copysmith_ai

Lighthaven PR Departm... @LighthavenPR

888 Followers 12 Following The official twitter of the Lighthaven PR Department.

bilal @bilalchughtai_

818 Followers 662 Following interpretability & ai safety @ google deepmind | cambridge mmath

DeepSeek @deepseek_ai

972K Followers 0 Following Unravel the mystery of AGI with curiosity. Answer the essential question with long-termism.

Nathan Labenz @labenz

16K Followers 3K Following AI Scout, building text-2-video @Waymark, host of The Cognitive Revolution podcast

Ryan Greenblatt @RyanPGreenblatt

6K Followers 4 Following Chief scientist at Redwood Research (@redwood_ai), focused on technical AI safety research to reduce risks from rogue AIs

Davis Brown @davisbrownr

452 Followers 974 Following Research in science of {AI security, safety, DL}. PhD student at UPenn & RS at @PNNLab

Matej Jusup @MatejJusup

200 Followers 180 Following A PhD in multi-agent RL at ETH Zurich and a chess enthusiast (2585 Elo @Chesscom) who developed an LM @GoogleDeepMind capable of playing the game (3200 Elo).

Alex Serrano @sertealex

28 Followers 237 Following AI research | Prev. Research Intern @CHAI_Berkeley @Google

Luke Bailey @LukeBailey181

369 Followers 278 Following CS PhD student @Stanford. Former CS and Math undergraduate @Harvard.

SoLaR @ NeurIPS2024 @solarneurips

267 Followers 0 Following NeurIPS2024 workshop for Socially Responsible Language Modelling Research

Impact Academy @aisafetyfellows

202 Followers 33 Following We're a startup that runs cutting-edge fellowships to enable global talent to use their careers to contribute to the safe and beneficial development of AI.

Patrick McKenzie @patio11

185K Followers 802 Following I work for the Internet and am an advisor to @stripe. These are my personal opinions unless otherwise noted.

Jesse Smith @JesseTayRiver

1K Followers 609 Following BJJ brown belt and effective altruist torn between x-risk & global dev. Building diagnostics, collapse resilience & post-apocalyptic skills.

Daniel Filan 🔎 @freed_dfilan

797 Followers 593 Following This is my personal / non-professional account. My professional account is @dfrsrchtwts.

Micah Carroll @MicahCarroll

1K Followers 688 Following AI PhD student @berkeley_ai /w @ancadianadragan & Stuart Russell. Working on AI safety ⊃ preference changes/AI manipulation.

The Midas Project Wat... @SafetyChanges

1K Followers 1 Following We monitor AI safety policies and web content for unannounced changes. Anonymous submissions: https://t.co/5Ke9mIqh3e Run by @TheMidasProj

OpenAI Newsroom @OpenAINewsroom

112K Followers 3 Following The official newsroom for @OpenAI. Tweets are on the record. If you like this account, you’ll love our blog: https://t.co/nEYf8Iq3C0

Adrià Garriga-Alonso @AdriGarriga

1K Followers 595 Following Research Scientist at FAR AI (@farairesearch), making friendly AI.

carl feynman @carl_feynman

4K Followers 259 Following I’ve spent a lifetime switching my Special Interest every year or two. By now I’m surprisingly knowledgeable in a lot of fields— a skill now obsoleted by AI.

Cem Anil @cem__anil

3K Followers 2K Following Machine learning / AI Safety at @AnthropicAI and University of Toronto / Vector Institute. Prev. @google (Blueshift Team) and @nvidia.

Alex Mallen @alextmallen

393 Followers 279 Following Redwood Research (@redwood_ai) Prev. @AiEleuther

Andrew Lampinen @AndrewLampinen

9K Followers 2K Following Interested in cognition and artificial intelligence. Research Scientist @DeepMind. Previously cognitive science @StanfordPsych. Tweets are mine.

Jordan Taylor @JordanTensor

368 Followers 1K Following Working on new methods for understanding machine learning systems and entangled quantum systems.

Emmett Shear @eshear

119K Followers 970 Following CEO of Softmax: Applied developmental cybernetics research

Kayo Yin @kayo_yin

15K Followers 697 Following PhD student @berkeley_ai @berkeleynlp. AI alignment & signed languages. Prev @carnegiemellon @polytechnique, intern @msftresearch @deepmind. 🇫🇷🇯🇵

Trenton Bricken @TrentonBricken

12K Followers 2K Following Trying to figure out what makes minds and machines go "Beep Bop!" @AnthropicAI

Grace (cross posting ... @kindgracekind

5K Followers 2K Following ideonomist, ai navel gazer, skyborg https://t.co/UGyhDIKCaj

Fred Zhang @FredZhang0

1K Followers 508 Following research scientist @googledeepmind, prev phd @berkeley_eecs, DM open

The Cultural Tutor @culturaltutor

1.7M Followers 69 Following I've written a book, and you can get it here:

Jascha Sohl-Dickstein @jaschasd

25K Followers 717 Following Member of the technical staff @ Anthropic. Most (in)famous for inventing diffusion models. AI + physics + neuroscience + dynamics.

John Schulman @johnschulman2

65K Followers 1K Following Recently started @thinkymachines. Interested in reinforcement learning, alignment, birds, jazz music

Yong Zheng-Xin (Yong) @yong_zhengxin

2K Followers 2K Following safety and reasoning @BrownCSDept || ex-intern/collab @AIatMeta @Cohere_Labs || sometimes write on https://t.co/cXhbz6Fx3t